You’ve seen it at your company: initiatives rolled out without proper planning, only to fail or flounder. Fortunately, you and your CEO know what to do. Let’s quickly form a data science team!

A year later, your data science team dissolves. What happened?

Seasoned data science advisors warn that most companies are not ready to build a data science function when they think they should.¹ Data scientists are starting to agree with those advisors.² Building a data science practice requires careful seeding and planning, both because the discipline depends heavily on other teams and systems and because decisions made early on have long-lasting repercussions.

In this article we’ll discuss the conditions necessary for a data science practice to succeed and provide exercises to help get you there.

I. Priming: Laying Groundwork

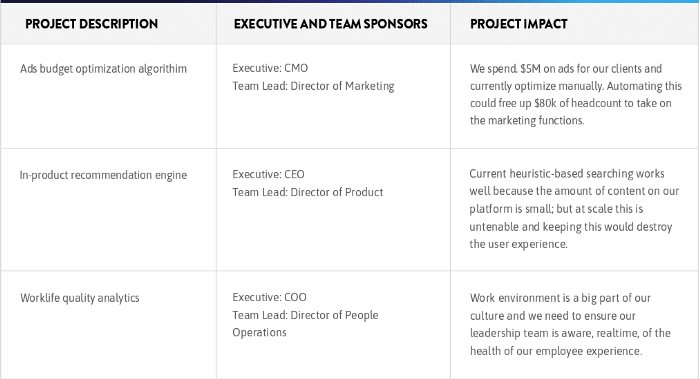

For a data science practice to flourish, its founders need to do two things at the outset: a) identify the clear business value the team will bring to the company, and b) confirm that the team has buy-in from the other teams and executives it will affect. To establish both, we recommend building a list of every possible data science project: go through every team that could benefit from data science and, within each team, separate projects into internal- and external-facing.³ After creating this master list of projects, fill out the following for each project:

Stack rank the projects by impact, and meet with potential executive and team sponsors for each to see whether they're interested in the project and have the bandwidth to support it. It may be tempting to skip this step, but make sure every project has an executive sponsor: when push comes to shove, they will make or break your project by prioritizing the resources you need to execute it successfully.

For the projects that meet all of the criteria above, move on to Planning.
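The Priming steps can be captured in a small script. The sketch below is a hypothetical illustration, not a prescribed tool. The `Project` fields, the 1 to 5 impact scale, and the example projects (borrowed from footnote 3) are all assumptions. It stack ranks projects by impact and keeps only those that have an executive sponsor, a team sponsor, and sponsors with bandwidth. These are the projects ready for Planning.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Project:
    name: str
    team: str
    facing: str                              # "internal" or "external" (see footnote 3)
    impact: int                              # hypothetical score: 1 (low) to 5 (high)
    executive_sponsor: Optional[str] = None  # None until an executive commits
    team_sponsor: Optional[str] = None
    sponsors_have_bandwidth: bool = False


def ready_for_planning(projects: List[Project]) -> List[Project]:
    """Stack rank projects by impact, keeping only those with both sponsors and bandwidth."""
    eligible = [
        p for p in projects
        if p.executive_sponsor and p.team_sponsor and p.sponsors_have_bandwidth
    ]
    # sorted() is stable (even with reverse=True), so equal-impact projects keep their order.
    return sorted(eligible, key=lambda p: p.impact, reverse=True)


# Hypothetical master list for illustration only.
master_list = [
    Project("Lead scoring", "Sales", "internal", 4, "VP Sales", "Sales Ops", True),
    Project("Recommendation engine", "Product", "external", 5, None, "Product", True),
    Project("Team health dashboard", "People", "internal", 2, "COO", "HR", True),
]

for project in ready_for_planning(master_list):
    print(project.impact, project.name)
```

In this example the recommendation engine has the highest impact but drops out because it has no executive sponsor yet. That is the situation the paragraph above warns about.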

II. Planning: Developing a Roadmap

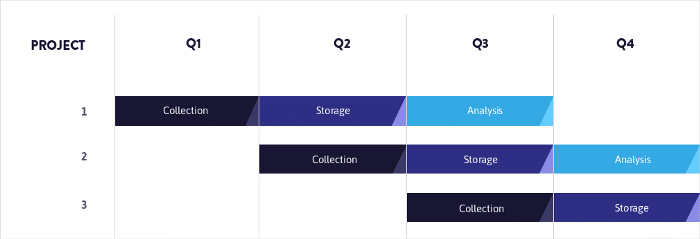

Example Gantt chart

Even within a single project there will be roadblocks, and therefore optimizations you can make. Drawing a roadmap will both de-risk and accelerate the building of your data science practice.

A roadmap should include objectives (the goals of each project), milestones (discrete deliverables on the way to completing a project), timelines (when work should be completed), and resources (what and who you need to complete your projects). We recommend visualizing your projects as a Gantt chart so you can see the building blocks of each project laid out over time.

As in the Gantt chart above, imagine that each data science project has three essential phases: collection, storage, and analysis. If two projects share the same data sources, you can optimize work streams by working with a data engineer to collect and store that data in one shot. Similarly, if you see that two work streams require the same analyst at the same time, you can decongest the schedule by staggering the work instead of double booking the analyst on two big projects.
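Both optimizations can be checked mechanically once the roadmap is written down as data. This is a minimal sketch under several assumptions. Each work stream is a row with a project, phase, owner, and start and end week. Weeks are half-open, so a stream ending in week 8 does not clash with one starting in week 8. All project, source, and person names are hypothetical.

```python
from collections import defaultdict
from itertools import combinations
from typing import Dict, List, NamedTuple, Set, Tuple


class WorkStream(NamedTuple):
    project: str
    phase: str        # "collection", "storage", or "analysis"
    owner: str        # person assigned to the work
    start_week: int
    end_week: int     # exclusive: the stream ends before this week begins


def shared_data_sources(sources_by_project: Dict[str, Set[str]]) -> Dict[str, List[str]]:
    """Return each data source needed by more than one project, with those projects."""
    users: Dict[str, Set[str]] = defaultdict(set)
    for project, sources in sources_by_project.items():
        for source in sources:
            users[source].add(project)
    return {src: sorted(projs) for src, projs in users.items() if len(projs) > 1}


def double_bookings(streams: List[WorkStream]) -> List[Tuple[WorkStream, WorkStream]]:
    """Return pairs of streams from different projects where one owner overlaps in time."""
    conflicts = []
    for a, b in combinations(streams, 2):
        same_owner = a.owner == b.owner and a.project != b.project
        overlaps = a.start_week < b.end_week and b.start_week < a.end_week
        if same_owner and overlaps:
            conflicts.append((a, b))
    return conflicts


sources = {
    "Lead scoring": {"CRM", "web events"},
    "Pricing": {"orders", "web events"},
    "Team health dashboard": {"HR system"},
}
streams = [
    WorkStream("Lead scoring", "analysis", "analyst_a", 4, 8),
    WorkStream("Pricing", "analysis", "analyst_a", 6, 10),
    WorkStream("Team health dashboard", "analysis", "analyst_b", 4, 8),
]

print(shared_data_sources(sources))   # "web events" can be collected and stored once
for a, b in double_bookings(streams):
    print(f"{a.owner} is double booked on {a.project} and {b.project}")
```

Moving the Pricing analysis to weeks 8 through 12 removes the conflict. That is the kind of phasing described above.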

Once you have identified who and what your projects require, and when, you are in a position to make the case for resources.

III. Resourcing: Supporting Data Efforts

Data science “fuel” comes in three forms: data, people, and money.

A. Data

One of the biggest and most written-about challenges in data science is ensuring you have high-quality data. Part of this is having enough data to solve a problem reliably, in a reproducible, generalizable manner. In some cases you'll need tens of thousands of observations to achieve a statistically significant result. In other cases, just hundreds of high-quality, reliable observations that measure the phenomenon you're interested in can be good enough. Here are the markers of data quality to look out for:

- Accessible. Data should be accessible, so that data scientists don't spend their time searching for it or building substantial infrastructure just to retrieve it.

- Consistent. Data should not change unexpectedly. When it does, analyses become difficult to reproduce and lose credibility at the whim of the systems that collect and serve the data.

- Accurate. Data should map to the real-world truth or phenomenon you are trying to analyze. When a piece of data says a company is headquartered in Texas, the company should be in Texas.

- Big [Enough]. There should be enough data to power your project, meeting a standard of rigor you have accepted ahead of the analysis. Examples of standards of rigor include: “accuracy above X,” statistical significance, or “passes a sniff test.”

- Complete. Your data should be complete in both breadth and depth. Breadth means the data has the fields necessary to solve the problem at hand. Depth means that within each field or column, there are no disruptive⁴ amounts of missing data.
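Two of these markers lend themselves to quick automated checks. The sketch below is illustrative and makes assumptions the article does not:

- Rows arrive as Python dicts.
- A value of `None` or `""` counts as missing.
- The 5% threshold standing in for "disruptive" is a placeholder you should set per project.

For "Big [Enough]," it uses one common standard of rigor: the textbook sample size for estimating a proportion within a margin of error E, n = z^2 * p * (1 - p) / E^2, with the conservative choice p = 0.5.

```python
import math
from statistics import NormalDist
from typing import Dict, Iterable, List, Mapping


def completeness_report(
    rows: Iterable[Mapping[str, object]],
    required_fields: List[str],
    max_missing_fraction: float = 0.05,   # placeholder for "disruptive"; tune per project
) -> Dict[str, object]:
    """Check breadth (required fields exist) and depth (fields are not too sparse)."""
    rows = list(rows)
    present = set().union(*(row.keys() for row in rows))
    # Breadth: fields the problem needs but the data never provides.
    missing_fields = sorted(set(required_fields) - present)
    # Depth: share of rows where an existing field is None or an empty string.
    missing_fraction = {
        field: sum(1 for row in rows if row.get(field) in (None, "")) / len(rows)
        for field in required_fields
        if field in present
    }
    too_sparse = sorted(f for f, frac in missing_fraction.items() if frac > max_missing_fraction)
    return {
        "missing_fields": missing_fields,
        "missing_fraction": missing_fraction,
        "too_sparse": too_sparse,
        "passes": not missing_fields and not too_sparse,
    }


def sample_size_for_proportion(margin: float, confidence: float = 0.95, p: float = 0.5) -> int:
    """Observations needed to estimate a proportion within +/- margin (normal approximation)."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)   # about 1.96 for 95% confidence
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)


# Hypothetical company records for illustration only.
rows = [
    {"company": "A", "hq_state": "TX"},
    {"company": "B", "hq_state": None},
    {"company": "C", "hq_state": "CA"},
    {"company": "D", "hq_state": "NY"},
]
report = completeness_report(rows, ["company", "hq_state", "revenue"])
print(report["missing_fields"], report["too_sparse"], report["passes"])
print(sample_size_for_proportion(0.05), sample_size_for_proportion(0.01))
```

The sample-size function shows how quickly the requirement grows as the standard of rigor tightens. Shrinking the margin fivefold multiplies the required number of observations by roughly 25.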

Even with state-of-the-art infrastructure and a talented data science team, low quality data will yield low quality output.⁵

B. Support

Data projects are cross-functional, especially when working with systems that handle data. The most common and important complementary technical support roles are Data Engineering⁶ and DevOps.⁷

- Data Engineering builds data pipelines, maintains data infrastructure, and sometimes implements or co-develops machine learning algorithms. Data engineers help manage databases, move data reliably between locations, ensure data quality, and provide access to data.

- DevOps manages tools and practices that increase the company’s ability to execute more efficiently. They would provision access to data systems, give access to tools, and provide general technical support.

Without this support, the job will fall onto a data scientist whose training and skill set are meaningfully different from those of these complementary roles. Except in the case of the unicorn data scientist,⁸ requiring someone without these skills to take on this work will almost invariably lead to frustration and demoralization.

C. Budget

Budgeting will allow you to have the people and tools you need at the times you need them. To help set expectations, here are some recent numbers:

- Team. Above is a table of average annual base salaries by job function in San Francisco. This does not include equity compensation, benefits, and other costs associated with hiring, which vary wildly depending on company size and compensation policy.

- Tools. These include data systems and tools for ETL, databases, data exploration, data reporting, and machine learning. For a small-scale implementation, any of these can cost $0, $1,000, or $10,000 per month. We recommend holding several sales calls with vendors, with a technical team member present, to determine the cost for your use case.

If you have verified that the available data supports your projects, identified people who can provide technical support, and set aside budget for team and tools, you are in a strong position to start the data science team at your company.

Next Steps

So, are you ready to build a data science team? You’ve read through this article and have the core concepts in place. Below is a quick review of those concepts and some exercises you can do to be on your way:

Priming. Identifying value and buy-in.

- Create a master list of data science projects by team/department. If it helps, separate projects by internal- and external-facing projects.

- Go through the table, identifying executive sponsors and project impact.

- Stack rank the projects by impact.

Planning. Roadmapping your projects.

- Lay the projects out over time, listing objectives, milestones, timelines, and resources in order to think about what you’ll need to do and when.

- Identify overlapping and non-overlapping elements, such as shared data sources or people needed by two projects at the same time.

Resourcing. Ensuring you have what you need to get the job done.

- Data. Confirm that existing and new data meet the criteria in the checklist in III.A. If not, create a plan to address the deficiencies.

- Support. Ensure the people in the roles listed in III.B. are available to help; and if not, figure out where you can find people to do those jobs.

- Budget. Estimate how much people and tools will cost for your projects. The lists in III.C. should provide general estimates.

If you think you’re ready to find someone to start a data team, see our article Am I Ready for a Full-Time Data Scientist? And if you have any questions, please reach out to us directly through www.stratusdata.io.

—

² Medium: Why So Many Data Scientists are Leaving Their Jobs

³ Internal-facing projects are ones where the goal is to enhance the employee experience. Examples include a lead scoring algorithm that helps salespeople sort through hundreds of leads, or dashboards that help managers see the health of their teams at any given time.

External-facing projects are those that enhance the customer experience. Examples include recommendation engines that serve products, or pricing algorithms that price goods for retail customers.

⁴ Disruptive means the missing data leads to uninterpretable or meaningless results, disables important analyses, or simply gives reason for concern about the data source as a whole.

⁵ Garbage In, Garbage Out: How Anomalies Can Wreck Your Data

⁶ Data Scientist versus Data Engineer

⁷ Software Development (Dev) and Information Technology Operations (Ops)